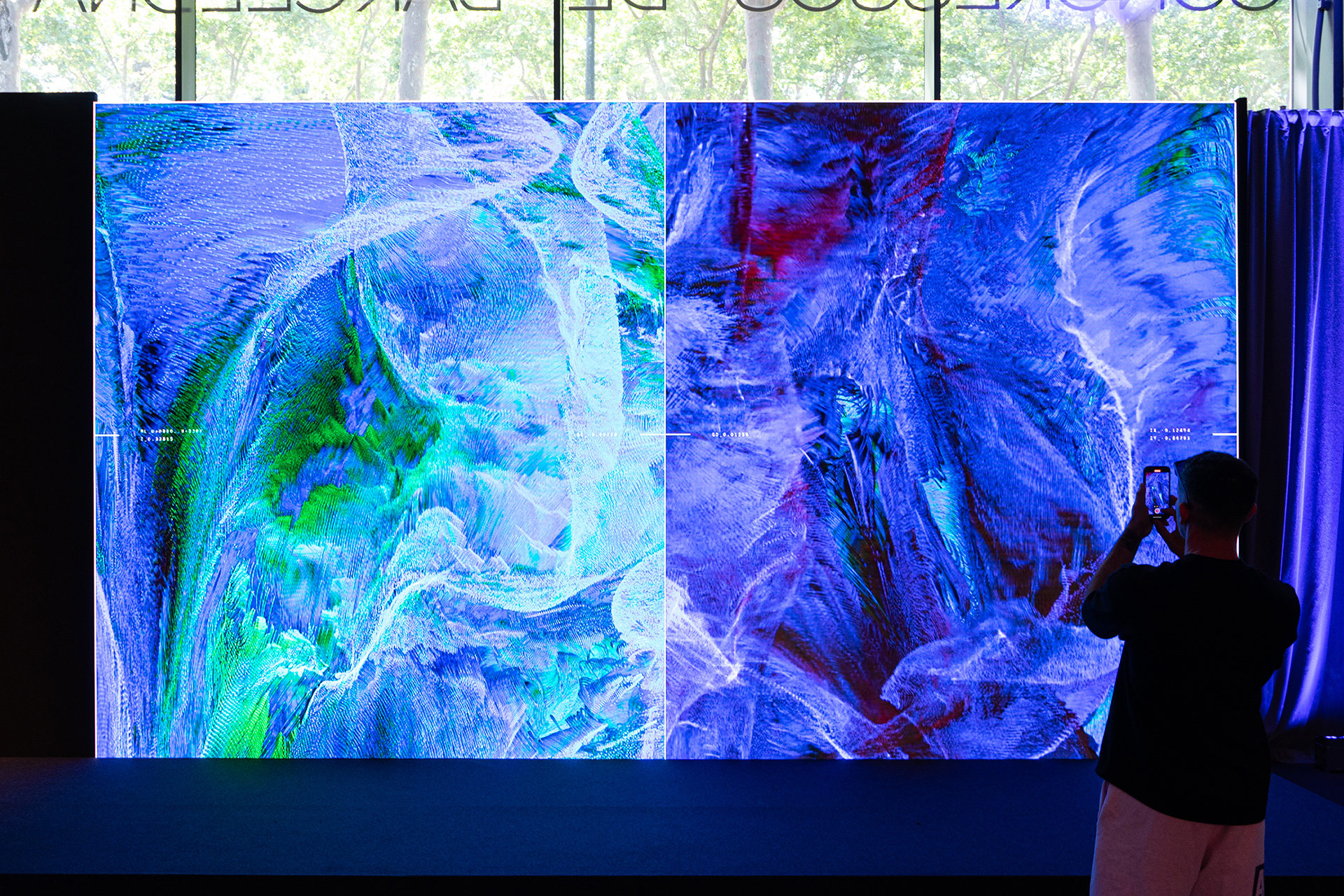

Abstract Language Model (Sync)

2023 — Video installation, 12 min, dim. 4x 2560 x 1440 px

Trained artificial neural network, custom software, 1- / 4-channel video

For Abstract Language Model, an artificial neural network was trained on the entire character sets represented in the Unicode Standard. The resulting complex data models contain translations of all available human sign systems as equally representable, machine-created states. Through the extraction and interpolation of these artificially created semiotic systems, a transitionless universal language emerges, one that can be read as a trans-human / trans-machine language.

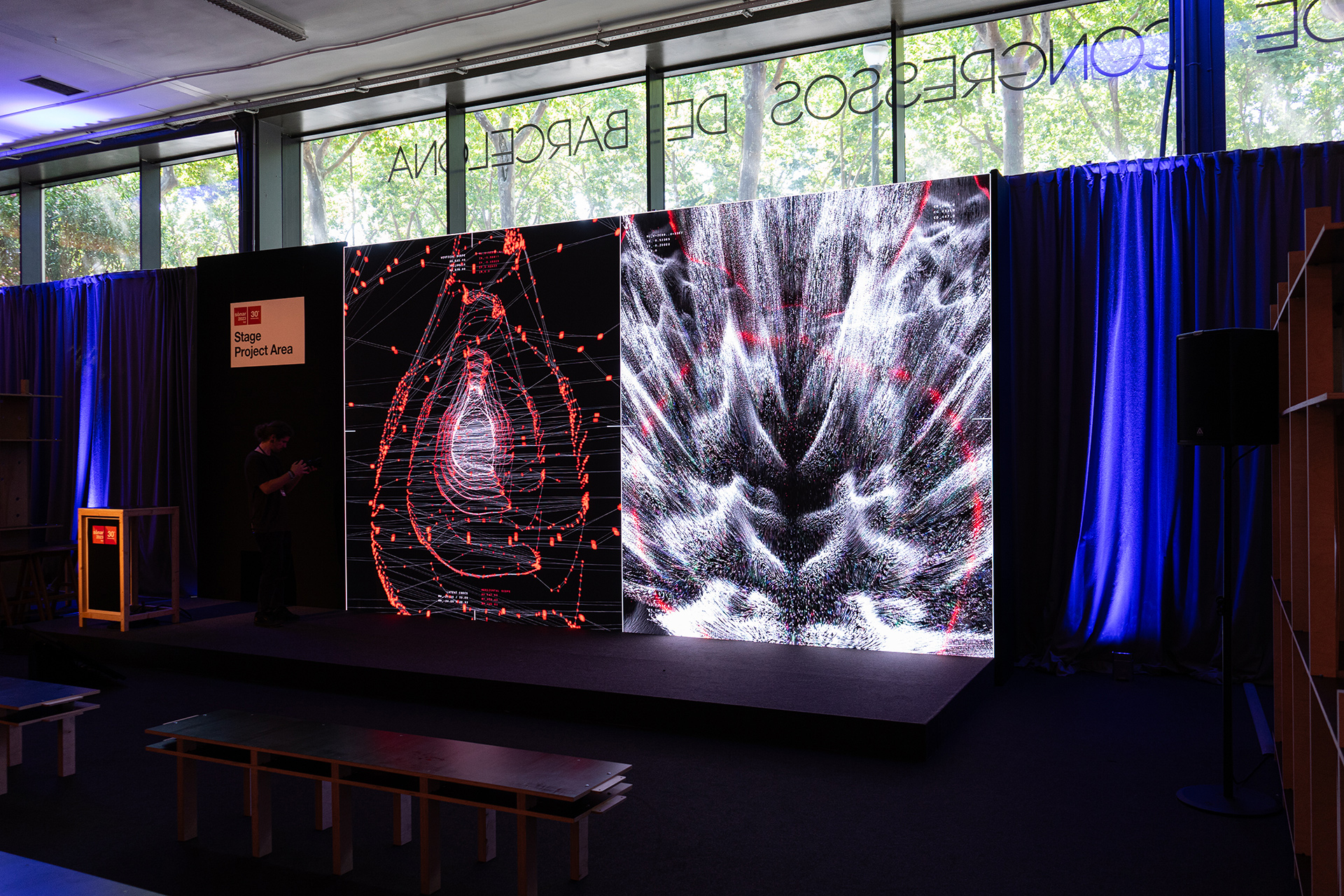

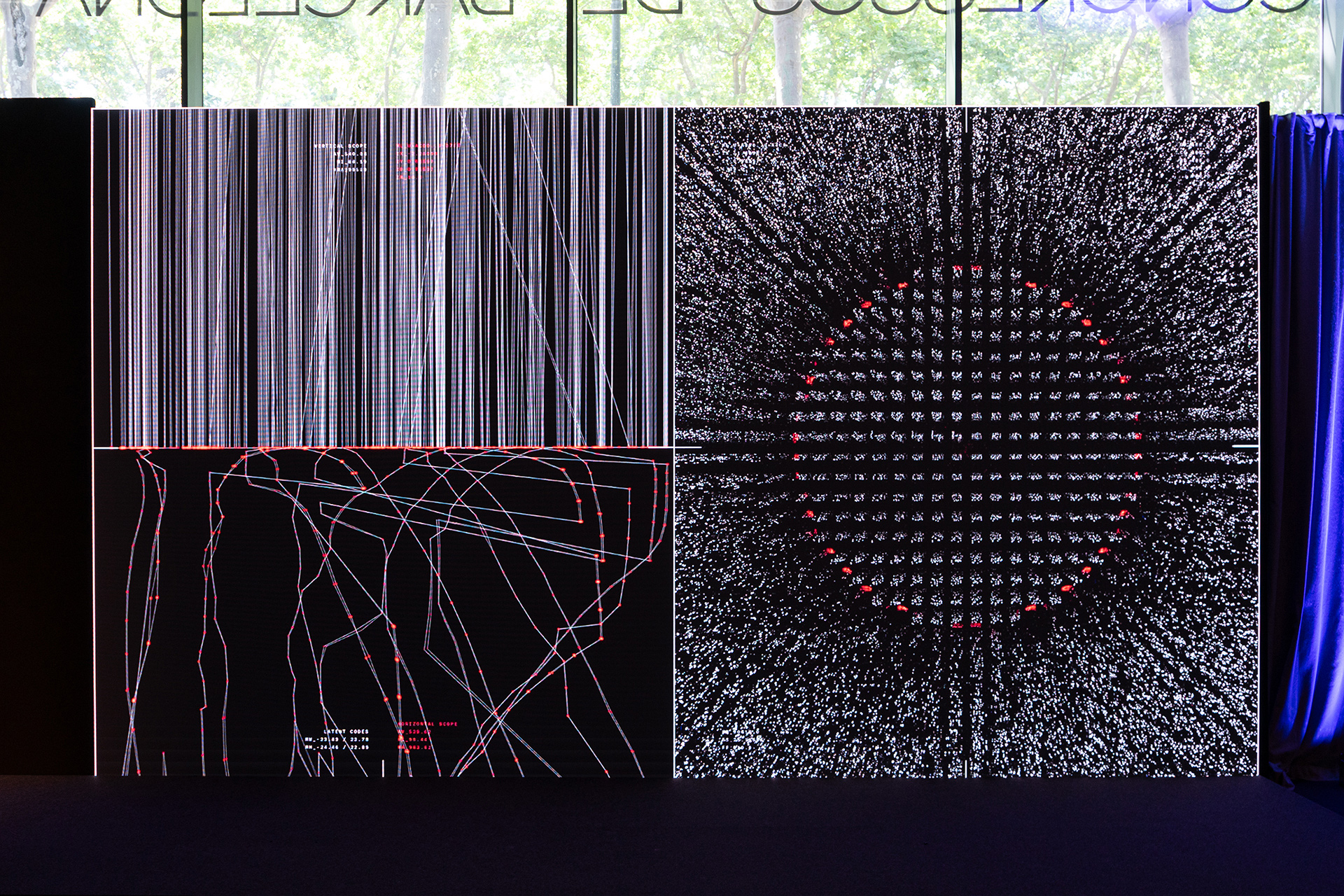

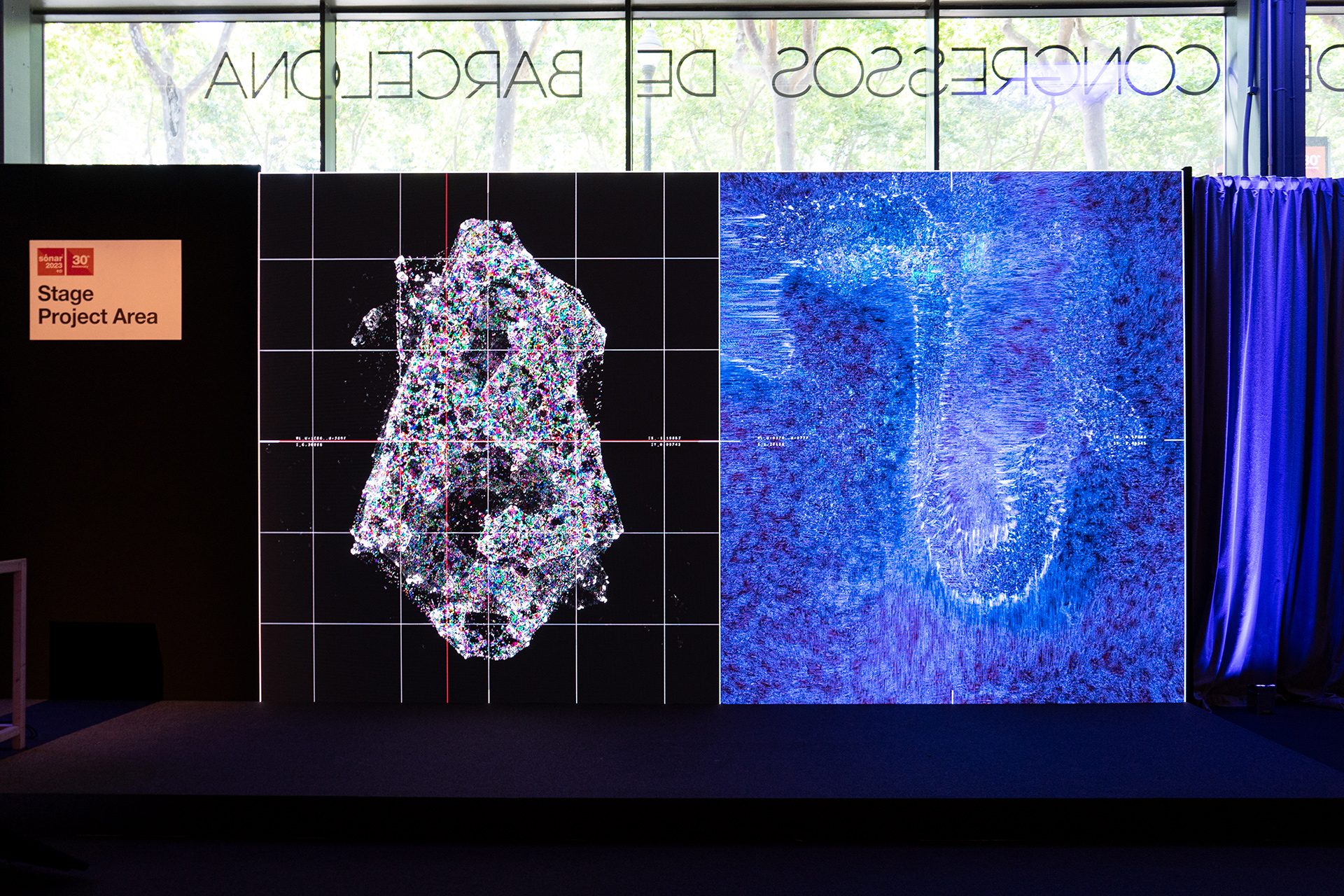

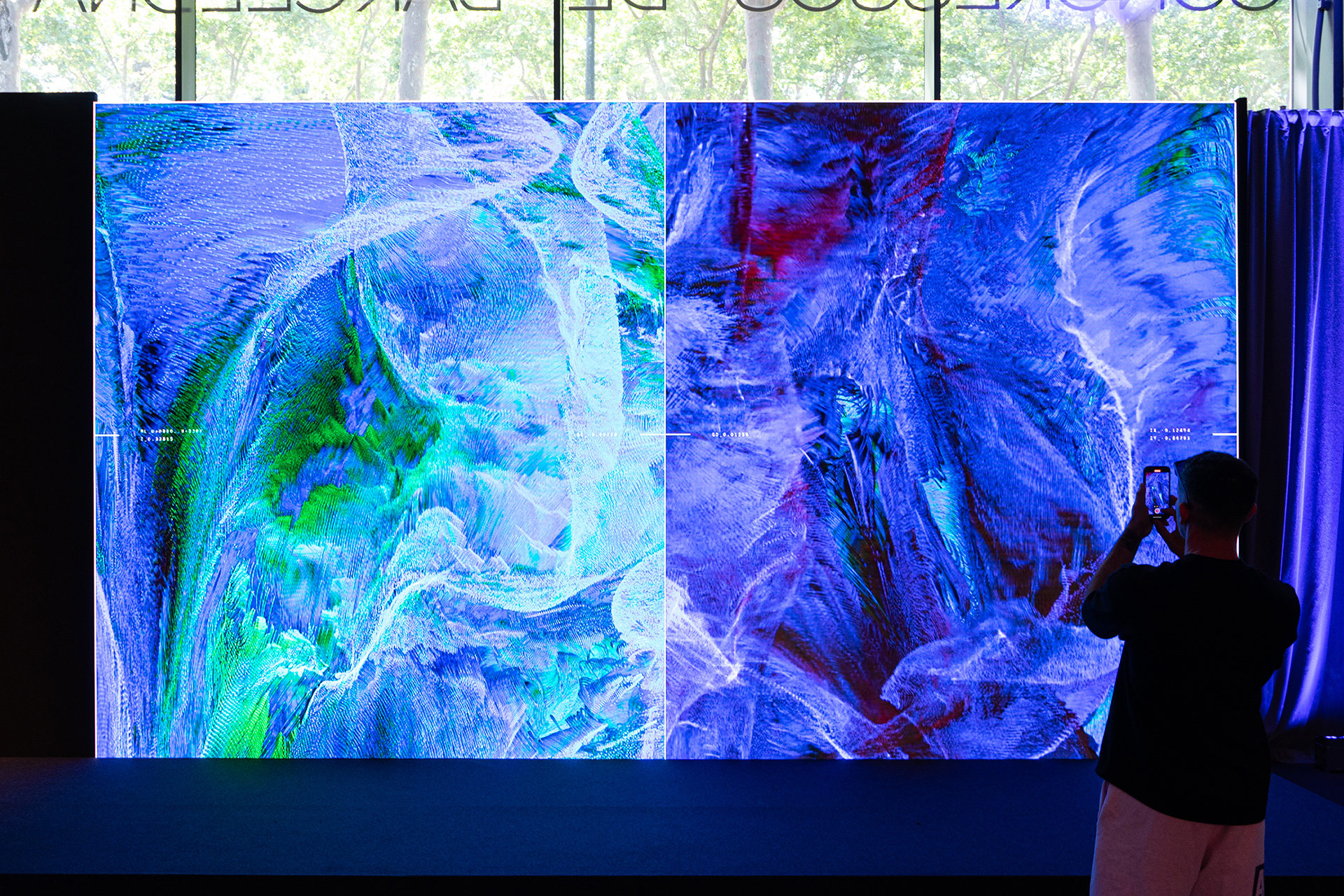

The visualization of these processes is displayed in the 4-channel video installation Abstract Language Model (Sync). Consisting of four synchronized visualizations across seven different states (Extraction, Analysis, Rearrange, Process, Transformation, Learning and Universal Language), the audio-visual sequence is based on real-time interpolation through the trained models and depicts the transformation into a machine-created semiotic system.

A detailed description of the research and process behind Abstract Language Model can be found here (Abstract Language Model with Monolith YW, 2020-2022).

In situ at Sónar Istanbul / Turkey, April 2024 (Photos by Mete Kaan Özdilek, Sónar Istanbul and Zorlu PSM)

In situ at Sónar+D, Barcelona / Spain, June 2023 (1-channel version)