Abstract Language Model (Live)

2022 — Audio-visual performance, 45 min

Trained artificial neural network, custom software

For Abstract Language Model, an artificial neural network was trained on all the character sets represented in the Unicode Standard. The resulting complex data models translate all available human sign systems into equally representable, machine-created states. Through extraction and interpolation of these artificially created semiotic systems, a transitionless universal language emerges, which can be seen as a trans-human / trans-machine language. The live performance presents the stages of this process, Extraction > Analysis > Rearrange > Process > Transformation > Universal Language, as an audio-visual narration.

A detailed description of the research and process behind Abstract Language Model can be found here (Abstract Language Model with Monolith YW, 2020-2022).

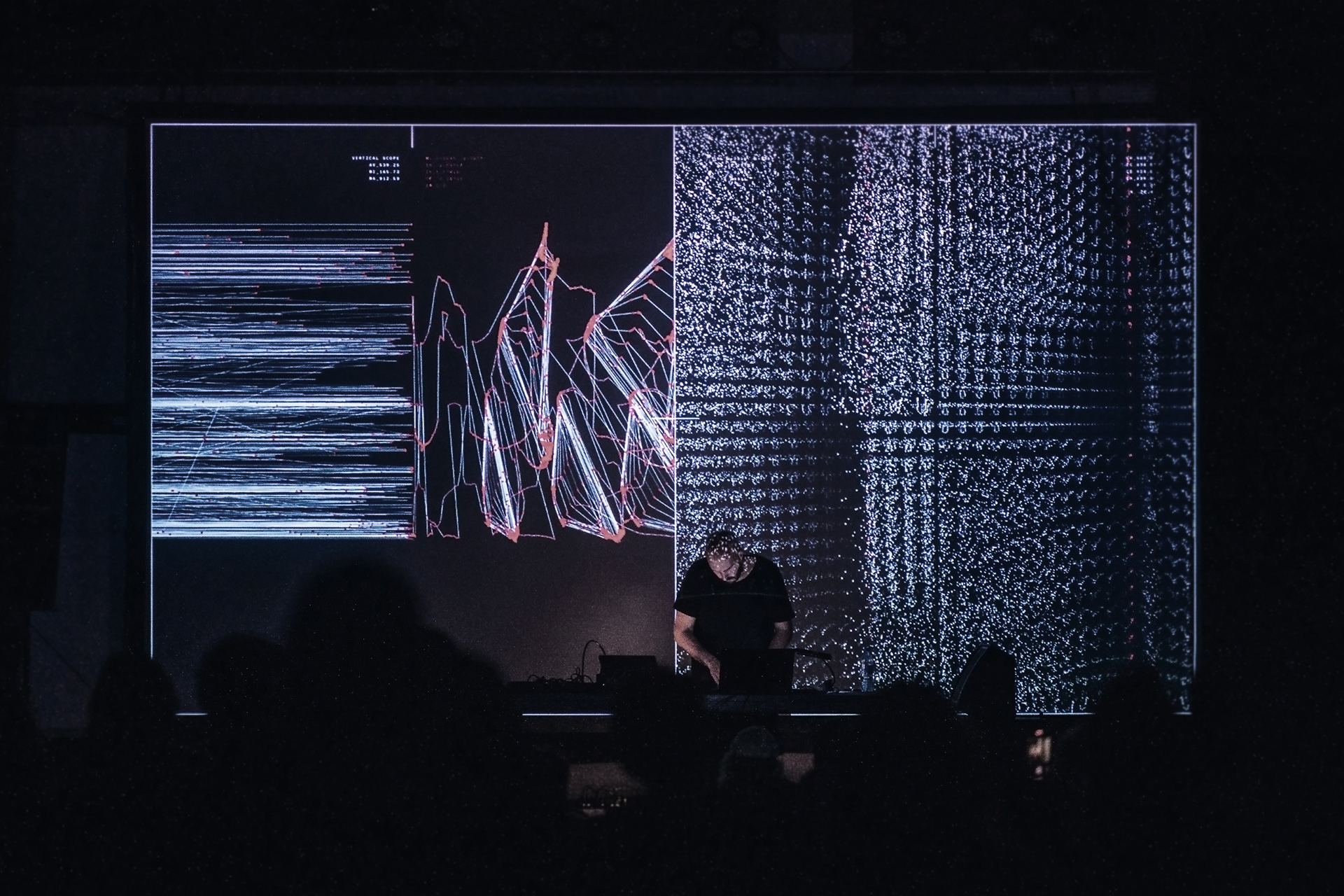

In situ at OSA Festival, Sopot / Poland, 2023 (Photos by Helena Majewska and Konrad Gustawski)

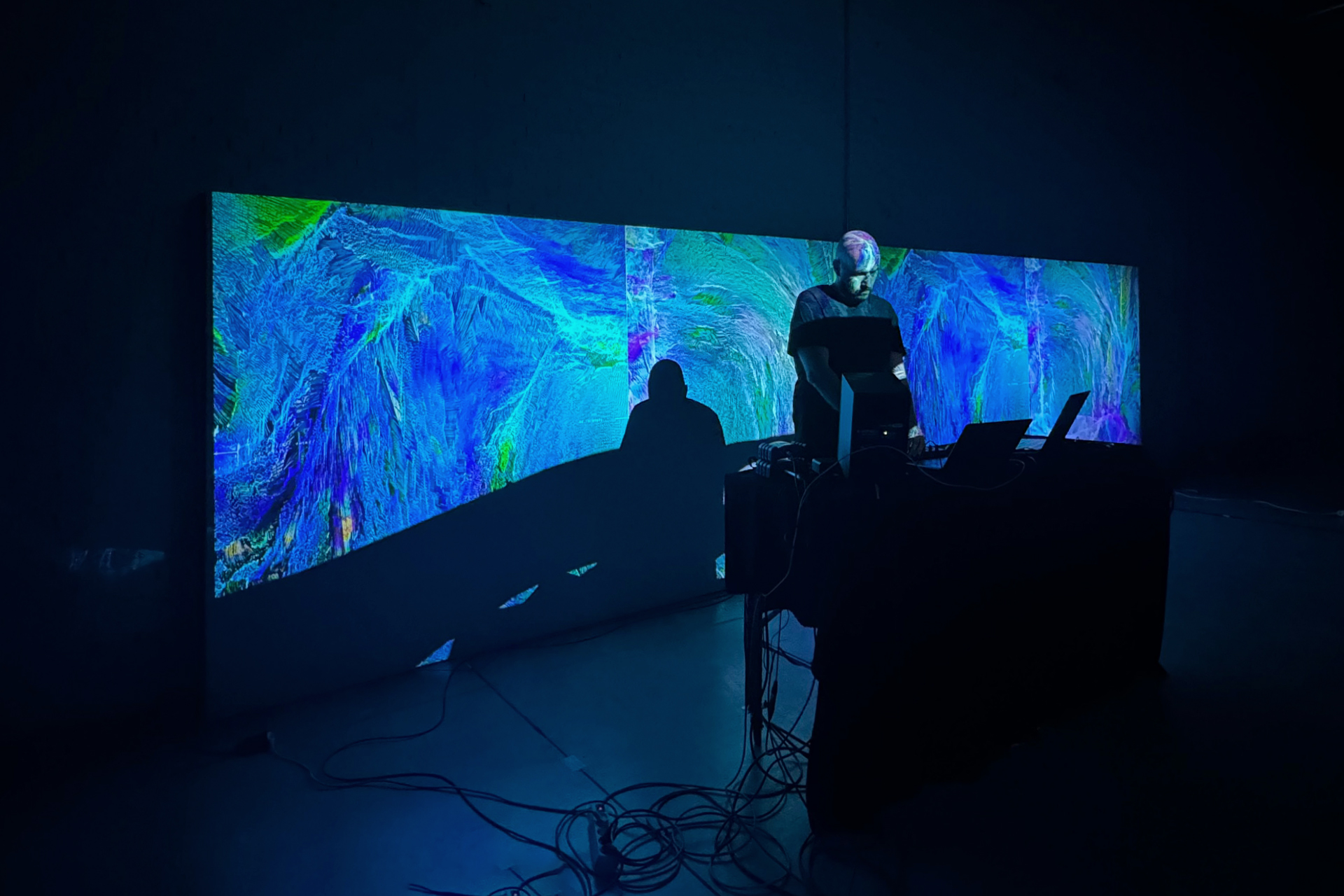

In situ at Medialab Matadero, Madrid / Spain, 2024 (Photo by Lucrezia Naglieri)

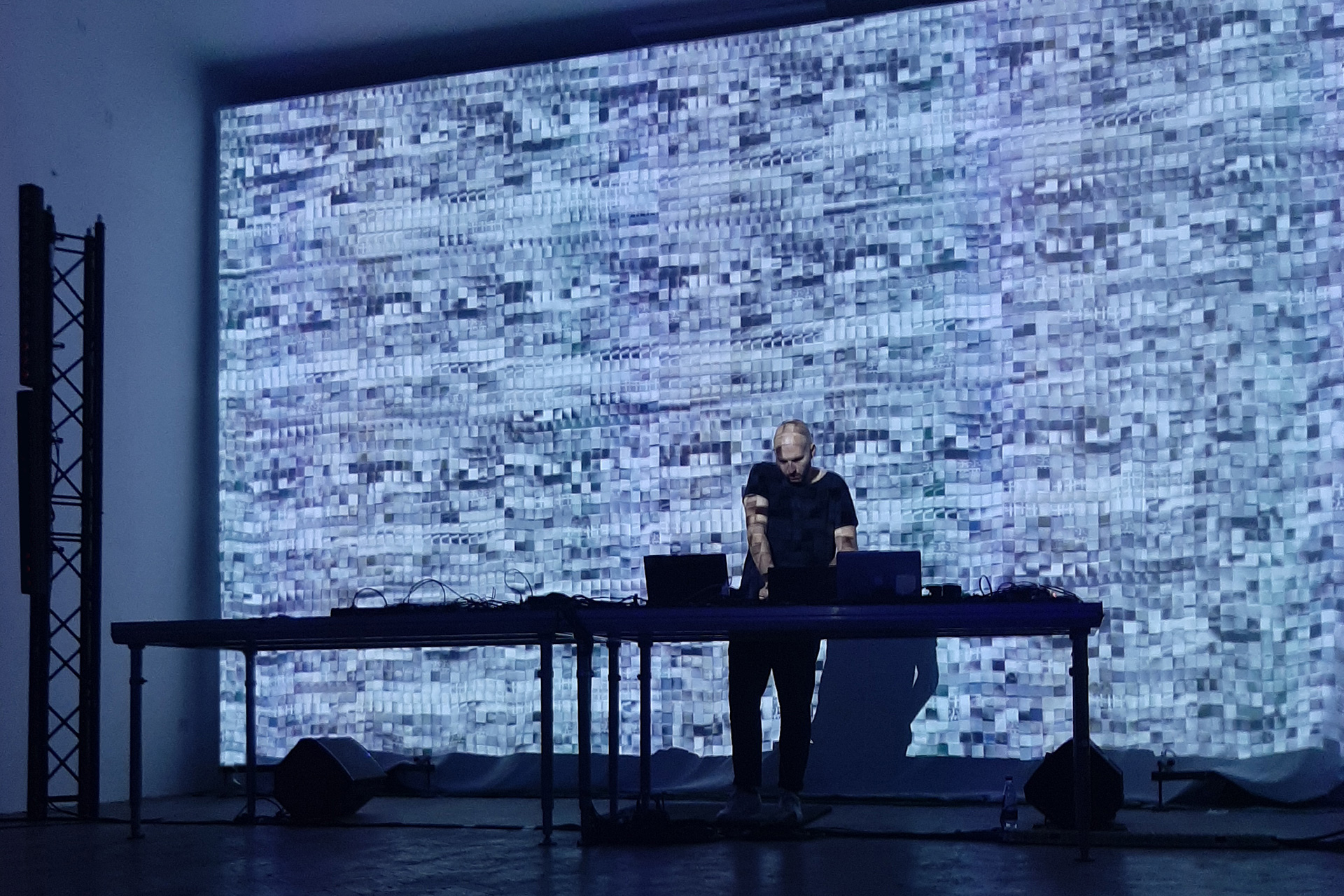

In situ at Festival Zero1, La Rochelle / France, 2024 (Photo by Agence Luma)

In situ at Clujotronic, Cluj-Napoca / Romania, 2022 (Photo by Nicoleta Vlad)

In situ at Alliance Française, Karachi / Pakistan, 2022 (Photo by Justine Emard)